2024-05-21 09:30:28

![SOLVED: transition matrix from B to B'](https://cdn.numerade.com/ask_images/d8851a045db64dcf8193c2aaa0d92e74.jpg)

SOLVED: B = {(1, 2, 4), (-1, 2, 0), (2, 4, 0)}, B' = {(0, 2, 1), (-2, 1, 0), (1, 1, 1)}. Find the transition matrix (change-of-basis matrix) from B to B' (a calculator may be used for RREF). Find the coordinate matrix [ ]_B where …
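Here is a minimal Python sketch for checking the problem above. It assumes the garbled first vector of B is (1, 2, 4). It uses the usual convention that the transition matrix P from B to B' satisfies [x]_B' = P [x]_B. The code row-reduces the augmented matrix [B' | B] to [I | P], so the columns of P are the B'-coordinates of the vectors of B. The function name `transition_matrix` is illustrative. Exact fractions avoid rounding.

```python
from fractions import Fraction

def transition_matrix(B, B_prime):
    """Return P with [x]_{B'} = P [x]_B by row-reducing [B' | B] to [I | P]."""
    n = len(B)
    # Basis vectors become the columns of each half of the augmented matrix.
    aug = [[Fraction(B_prime[j][i]) for j in range(n)] +
           [Fraction(B[j][i]) for j in range(n)] for i in range(n)]
    for col in range(n):
        # Swap up a row with a nonzero entry in this column (B' must be a basis).
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so the pivot entry becomes 1.
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Clear this column from every other row (Gauss-Jordan elimination).
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # The right half of the reduced matrix is P.
    return [row[n:] for row in aug]

B = [(1, 2, 4), (-1, 2, 0), (2, 4, 0)]        # first vector assumed to be (1, 2, 4)
B_prime = [(0, 2, 1), (-2, 1, 0), (1, 1, 1)]
P = transition_matrix(B, B_prime)
for row in P:
    print(" ".join(str(v) for v in row))
```

With these inputs P = [[-7, 3, 10], [5, -1, -6], [11, -3, -10]]. For example, the first column checks out because -7(0, 2, 1) + 5(-2, 1, 0) + 11(1, 1, 1) = (1, 2, 4).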

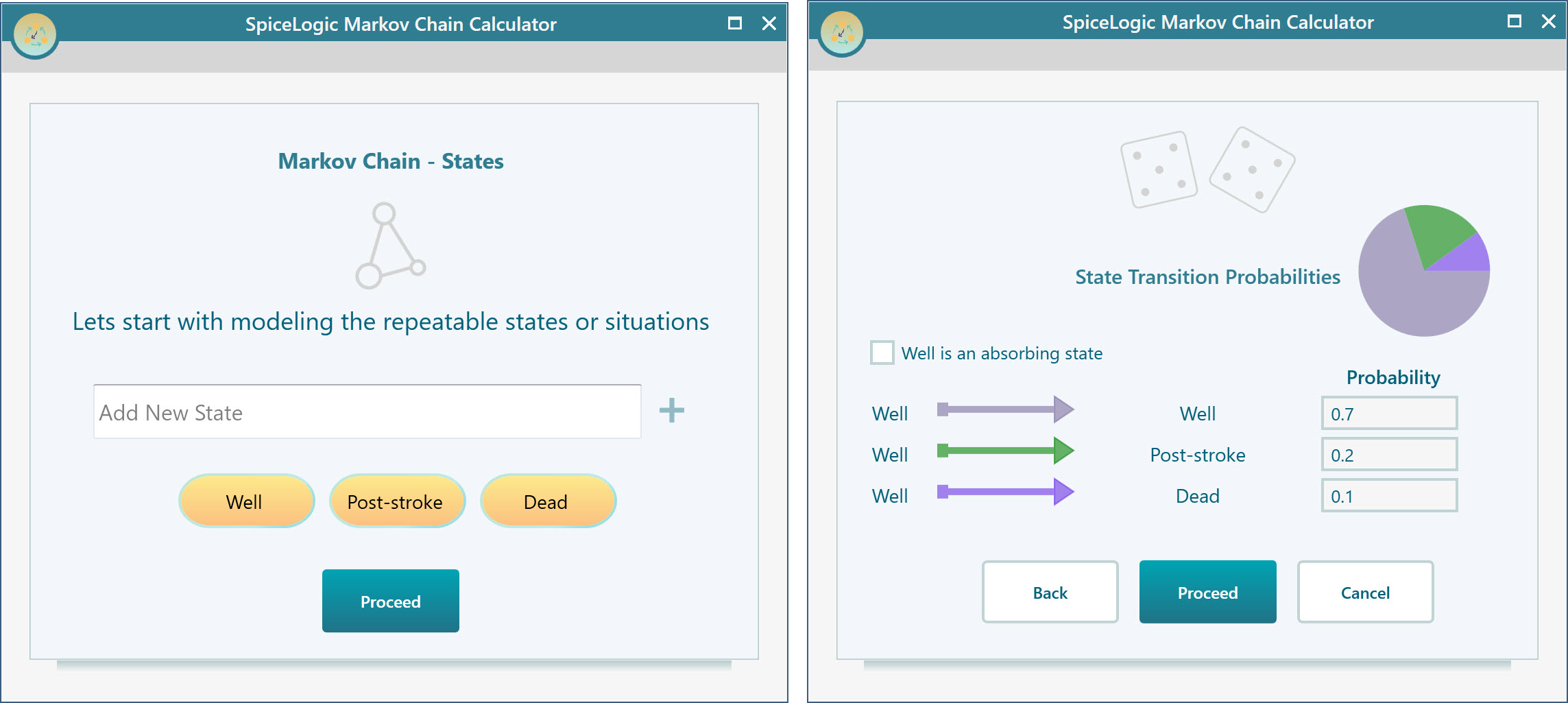

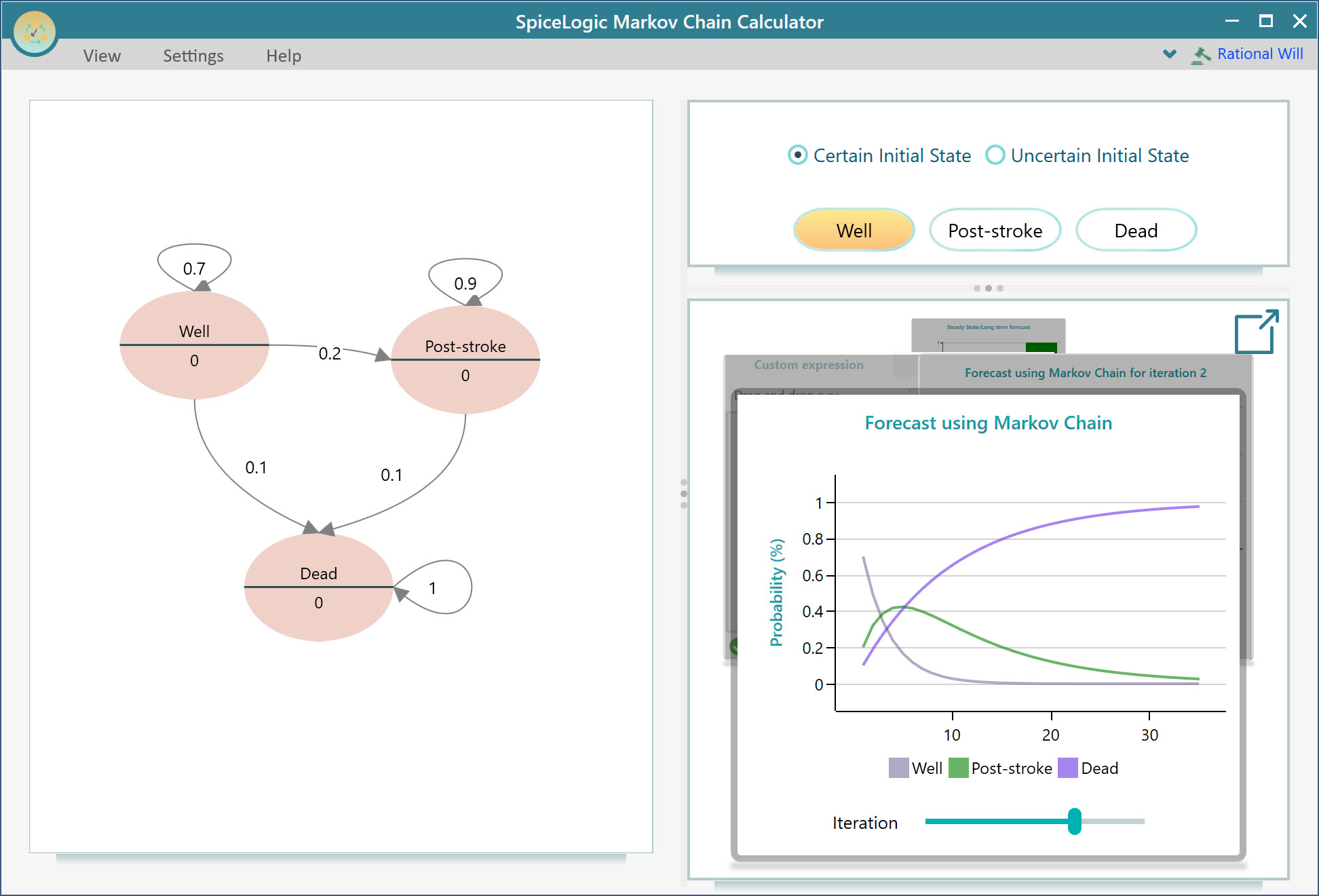

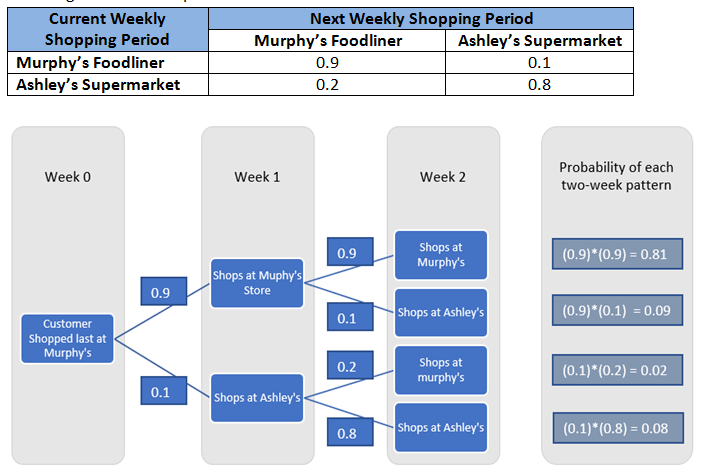

How to generate a model to compute the transition probabilities using Markov Chain - ActuaryLife
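One common way to get transition probabilities from data is the maximum-likelihood estimate. You count how often state i is followed by state j, then divide each count by the number of departures from i. The sketch below does this in Python. It is not necessarily the method used in the linked article. The function name and the healthy/sick path are hypothetical.

```python
from collections import Counter, defaultdict

def estimate_transition_matrix(sequence, states):
    """Maximum-likelihood estimate: count i -> j moves, then divide by row totals."""
    counts = defaultdict(Counter)
    for current, nxt in zip(sequence, sequence[1:]):
        counts[current][nxt] += 1
    matrix = {}
    for s in states:
        total = sum(counts[s].values())
        # A state never left in the data gets an all-zero row here.
        matrix[s] = {t: (counts[s][t] / total if total else 0.0) for t in states}
    return matrix

# Hypothetical yearly health status of one policyholder.
path = ["healthy", "healthy", "sick", "healthy", "sick", "sick", "healthy"]
P = estimate_transition_matrix(path, ["healthy", "sick"])
print(P)
```

The path has three departures from "healthy": one to "healthy" and two to "sick". The estimated row is therefore 1/3 and 2/3.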

infinito Máxima Reflexión How to generate a model to compute the transition probabilities using Markov Chain - ActuaryLife

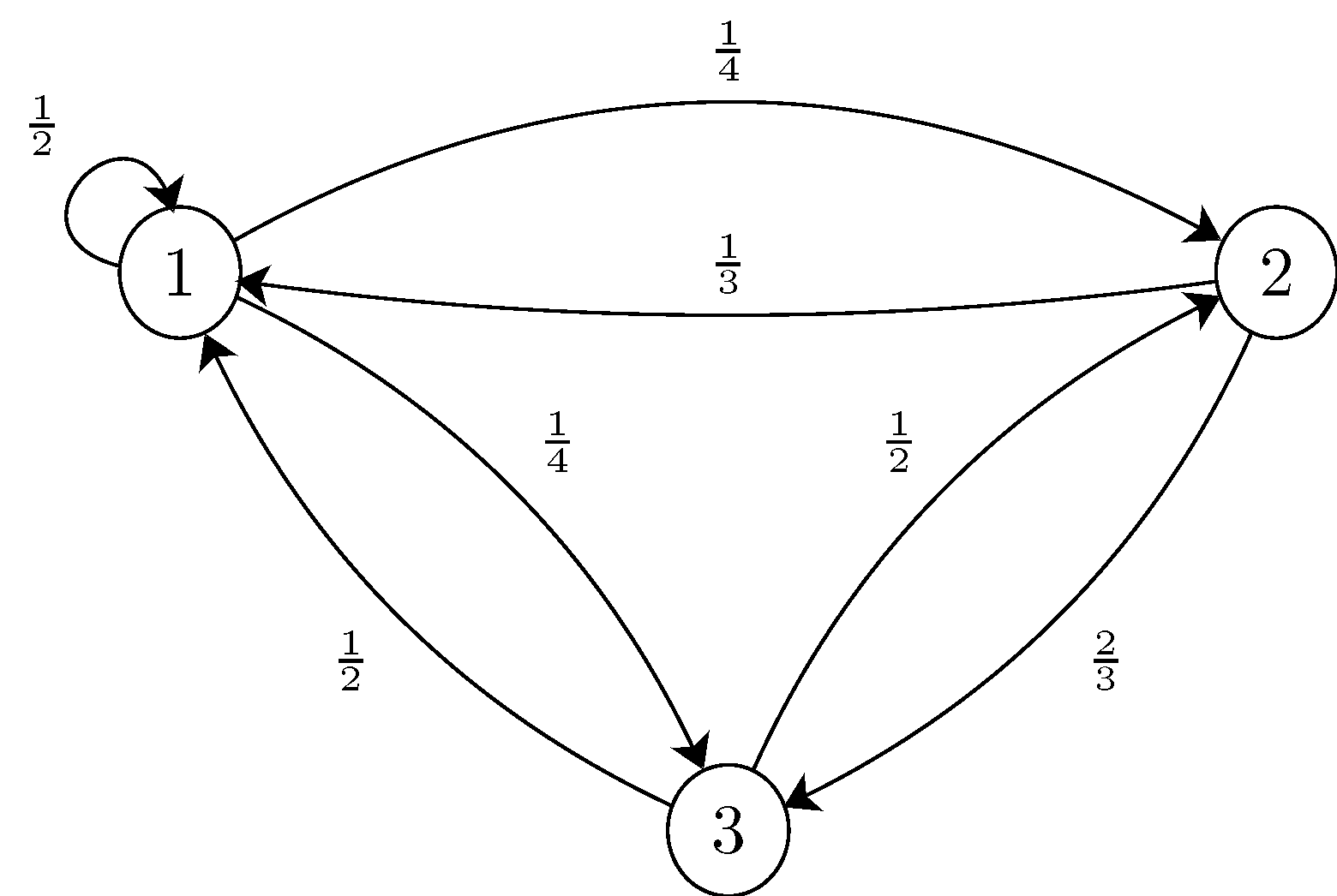

Finding the probability of a state at a given time in a Markov chain | Set 2 - GeeksforGeeks
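The probability of being in each state after n steps is the row vector pi_n = pi_0 P^n. Here pi_0 is the starting distribution. The sketch below computes it by repeated vector-matrix multiplication. The linked article may use a different technique, such as matrix exponentiation. The 3-state matrix is a hypothetical example.

```python
def distribution_after(P, start, steps):
    """Return pi_n = pi_0 P^n, where pi_0 puts all probability on state `start`."""
    n = len(P)
    dist = [0.0] * n
    dist[start] = 1.0
    for _ in range(steps):
        # One step of the chain: new[j] = sum_i dist[i] * P[i][j]
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
    return dist

# Hypothetical transition matrix; each row sums to 1.
P = [[0.5, 0.5, 0.0],
     [0.2, 0.3, 0.5],
     [0.0, 0.4, 0.6]]
dist2 = distribution_after(P, start=0, steps=2)
print(dist2)
```

Starting in state 0, two steps give probabilities 0.35, 0.40 and 0.25 for states 0, 1 and 2. These values are the first row of P^2.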

How to calculate the probability of hidden Markov models? - Stack Overflow
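A standard way to compute the probability of an observation sequence under a discrete hidden Markov model is the forward algorithm. Below is a minimal Python sketch of it. The two-state model (start, transition and emission probabilities) is a hypothetical toy example, not taken from the linked question.

```python
def forward_probability(obs, start, trans, emit):
    """P(observations | model) for a discrete HMM, via the forward algorithm."""
    n = len(start)
    # alpha[i] = P(o_1..o_t, hidden state at time t is i)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    for o in obs[1:]:
        # Sum over the previous hidden state, then emit the next observation.
        alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][o]
                 for j in range(n)]
    return sum(alpha)

# Hypothetical model: hidden state 0 = rainy, 1 = sunny.
start = [0.6, 0.4]
trans = [[0.7, 0.3],
         [0.4, 0.6]]
emit = [{"walk": 0.1, "shop": 0.4, "clean": 0.5},
        {"walk": 0.6, "shop": 0.3, "clean": 0.1}]
prob = forward_probability(["walk", "shop"], start, trans, emit)
print(prob)
```

Summing over hidden states at each step keeps the cost at O(T N^2). Enumerating all N^T hidden paths would cost far more. For "walk, shop" the result is 0.0552 + 0.0486 = 0.1038.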

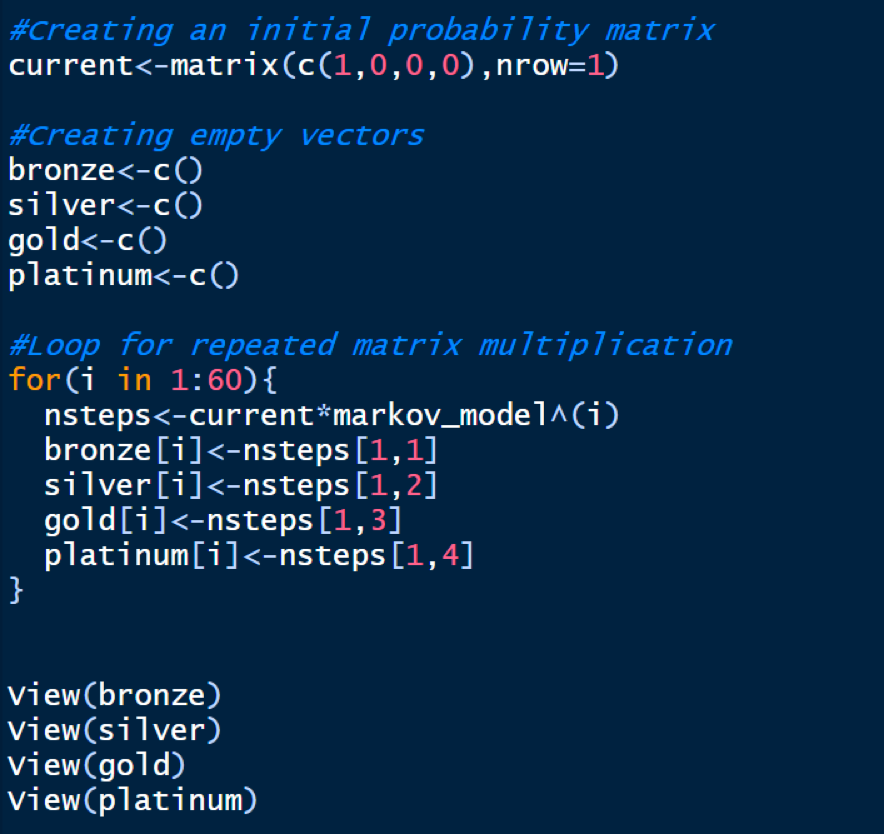

Prob & Stats - Markov Chains (15 of 38) How to Find a Stable 3x3 Matrix - YouTube
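This sketch reads "stable" in the video title as the steady-state (stationary) vector pi. That vector satisfies pi P = pi with its entries summing to 1. The Python code solves (P^T - I) pi = 0 and replaces one of the redundant equations with the normalization. It uses exact fractions and assumes a unique solution exists, as it does for an irreducible chain. The 3x3 matrix is hypothetical.

```python
from fractions import Fraction as F

def stationary_distribution(P):
    """Solve pi P = pi with sum(pi) = 1 exactly (assumes a unique solution)."""
    n = len(P)
    # Row j encodes sum_i pi_i * (P[i][j] - [i == j]) = 0, i.e. (P^T - I) pi = 0.
    A = [[F(P[i][j]) - (1 if i == j else 0) for i in range(n)] + [F(0)]
         for j in range(n)]
    # These n equations are linearly dependent; swap the last for sum(pi) = 1.
    A[-1] = [F(1)] * n + [F(1)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        for r in range(n):
            if r != col and A[r][col] != 0:
                f = A[r][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    # The last column of the reduced system holds pi.
    return [row[n] for row in A]

# Hypothetical transition matrix, written with fractions for an exact answer.
P = [[F(1, 2), F(1, 2), F(0)],
     [F(1, 5), F(3, 10), F(1, 2)],
     [F(0), F(2, 5), F(3, 5)]]
pi = stationary_distribution(P)
print(pi)  # -> [Fraction(8, 53), Fraction(20, 53), Fraction(25, 53)]
```

You can check the result directly. For instance, (pi P)_1 = (8 * 1/2 + 20 * 3/10 + 25 * 2/5) / 53 = 20/53, which equals pi_1.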

Malaccha: An R-based end-to-end Markov transition matrix extraction for land cover datasets - SoftwareX
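A land-cover transition matrix is usually built in two steps. First, cross-tabulate each pixel's class in an earlier map against its class in a later map. Then normalize each row into probabilities. The Python sketch below shows that generic idea on two hypothetical 3x3 rasters. It is not Malaccha's implementation, which is written in R. The function name and class labels are illustrative assumptions.

```python
from collections import Counter

def land_cover_transitions(map_t1, map_t2, classes):
    """Cross-tabulate per-pixel class changes, then row-normalize to probabilities."""
    counts = Counter()
    for row1, row2 in zip(map_t1, map_t2):
        for a, b in zip(row1, row2):
            counts[(a, b)] += 1
    matrix = {}
    for a in classes:
        total = sum(counts[(a, b)] for b in classes)
        # Classes absent from the first map get an all-zero row.
        matrix[a] = {b: (counts[(a, b)] / total if total else 0.0) for b in classes}
    return counts, matrix

# Hypothetical rasters: F = forest, A = agriculture, U = urban.
t1 = [["F", "F", "A"],
      ["F", "A", "A"],
      ["U", "A", "F"]]
t2 = [["F", "A", "A"],
      ["F", "U", "A"],
      ["U", "A", "A"]]
counts, P = land_cover_transitions(t1, t2, ["F", "A", "U"])
print(P)
```

In this toy example, half of the forest pixels became agriculture, and a quarter of the agriculture pixels became urban.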